What Happens When Middle Schoolers Build AI — and Take Responsibility for It


Over six weeks, our Eagles trained machine learning models, connected them to code to build AI applications, explored real-world industries, debated ethics, and defended their work on stage.

Artificial Intelligence is no longer something coming “someday.” It is already here — quietly shaping what we see, what we buy, what we’re recommended, and sometimes even what opportunities we receive. The real question for education is not whether children will use AI. They will. The real question is whether they will understand it — and whether they will have the judgment to question it.

Beginning with responsibility, not tools

We didn’t start with flashy demos. We started with responsibility. If humans teach machines, what are humans responsible for? Our Eagles wrestled with the difference between traditional programming and machine learning, and quickly discovered why rule-based systems break in the real world. You can write rules for what counts as a “chair,” but reality will eventually hand you something that doesn’t fit the definition.

Machine learning exists because rules fail. Instead of writing explicit instructions, humans provide examples — and the machine finds patterns. But that shift introduces new problems. Patterns can feel intelligent without understanding anything. A system can be highly confident and completely wrong. A model can work perfectly in a controlled environment and fall apart in the real world.
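Here is a toy Python sketch for readers curious what "rules versus examples" looks like in code. Everything in it is invented for illustration: the two features (leg count and whether there is a backrest), the labeled examples, and the function names. Real projects like the Eagles' learn from images or sounds, not hand-picked numbers. The learner is a one-nearest-neighbor classifier. It copies the label of whichever human-provided example is most similar to the new input.

```python
import math

# Hypothetical hand-written rule: "a chair has four legs and a backrest."
def rule_says_chair(legs: int, has_back: int) -> bool:
    return legs == 4 and has_back == 1

# Hypothetical labeled examples: ((legs, has_back), label), chosen by humans.
EXAMPLES = [
    ((4, 1), "chair"),
    ((0, 1), "chair"),   # beanbag chair
    ((1, 1), "chair"),   # office chair on a single post
    ((4, 0), "table"),
    ((3, 0), "stool"),
]

def nearest_neighbor(features):
    """1-nearest-neighbor: return the label of the closest labeled example."""
    _, label = min(EXAMPLES, key=lambda ex: math.dist(ex[0], features))
    return label

wheeled_chair = (5, 1)  # five-legged office chair the rule-writer never imagined
print(rule_says_chair(*wheeled_chair))   # False: the rule breaks
print(nearest_neighbor(wheeled_chair))   # chair: closest example is (4, 1)

dog = (4, 0)  # four legs, no backrest
print(nearest_neighbor(dog))             # table: pattern matched, nothing understood
```

The last line shows the flip side. The model has no idea what a dog is. It matches the closest pattern it knows and answers "table" without hesitation.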

We treated this quest as a judgment quest: not just “Can you make it work?” but “Should it be trusted?”

AI is already shaping real industries

Each Eagle investigated a real-world industry — medicine, education, transportation, hiring and employment, entertainment, security, and more. They explored how AI is already being used today, what kinds of data these systems rely on, who benefits, and who may be left out.

They created industry posters explaining current applications and plausible future expansions. But they didn’t stop there. Each Eagle also built a second poster focused on ethics, risk, and responsibility. They mapped possible harms, debated accountability, and defined boundaries: when humans must step in, when systems should be limited, and when AI should never be used at all.

AI is not neutral. It reflects the data, assumptions, and priorities of the humans who build and deploy it. The more powerful the tool, the more important the judgment behind it.

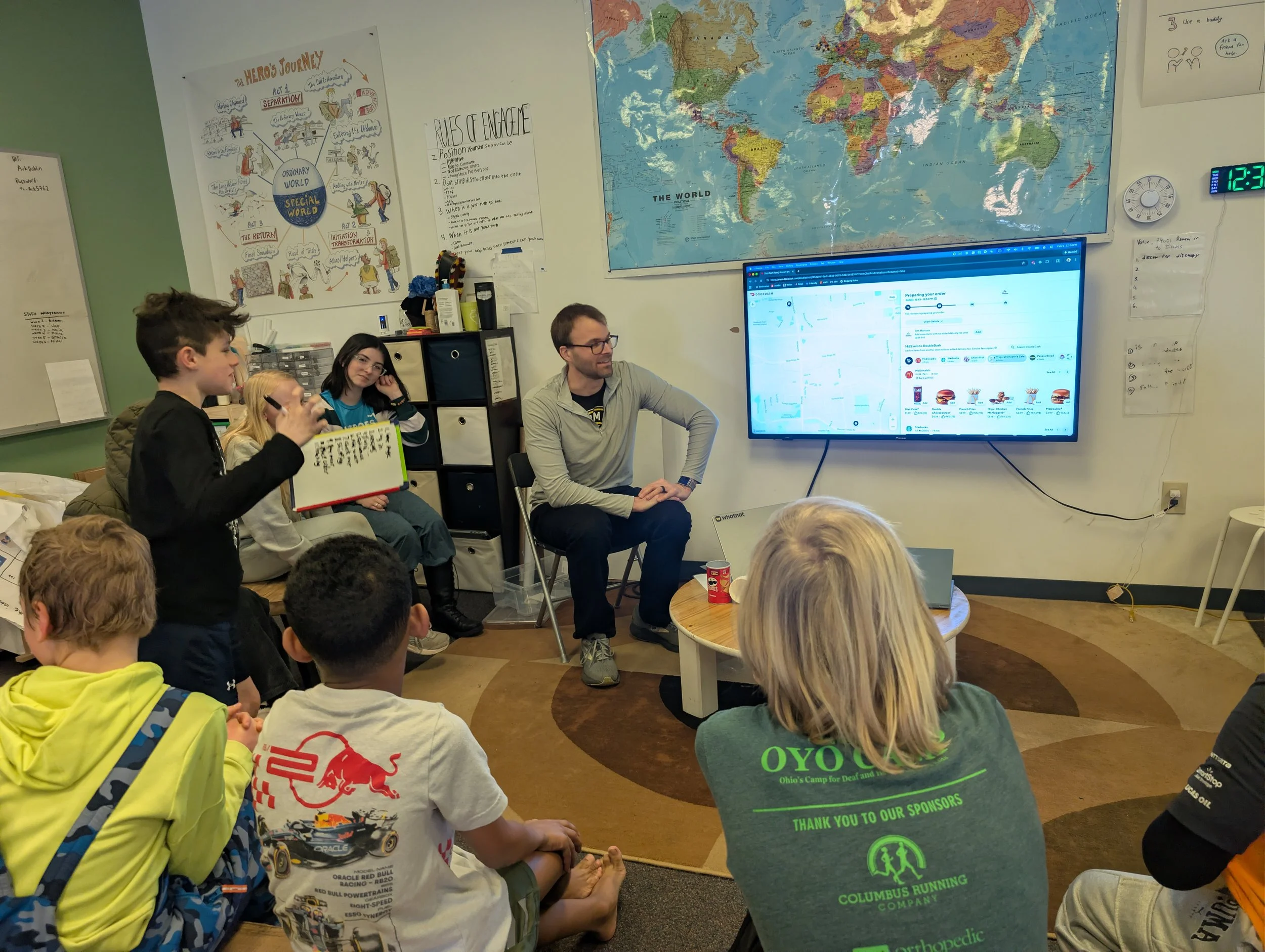

Learning from someone in the field

Midway through the quest, the Eagles met someone who lives this work daily: Stephen Bailey, a neuroscientist turned machine learning expert. Stephen didn’t give a vague talk about “the future of AI.” He showed learners what his work actually looks like — how models are evaluated, how decisions are made in practice, and what it means to move from theory to deployment.

One of the most memorable moments came when Stephen walked the Eagles through the DoorDash app, showing how machine learning shapes everything from recommendations to delivery timing to routing. Then he made it real in the best possible way: he ordered Timbits for the studio. The Eagles didn't just enjoy a treat. They watched a real-world machine learning system make probabilistic decisions in real time.

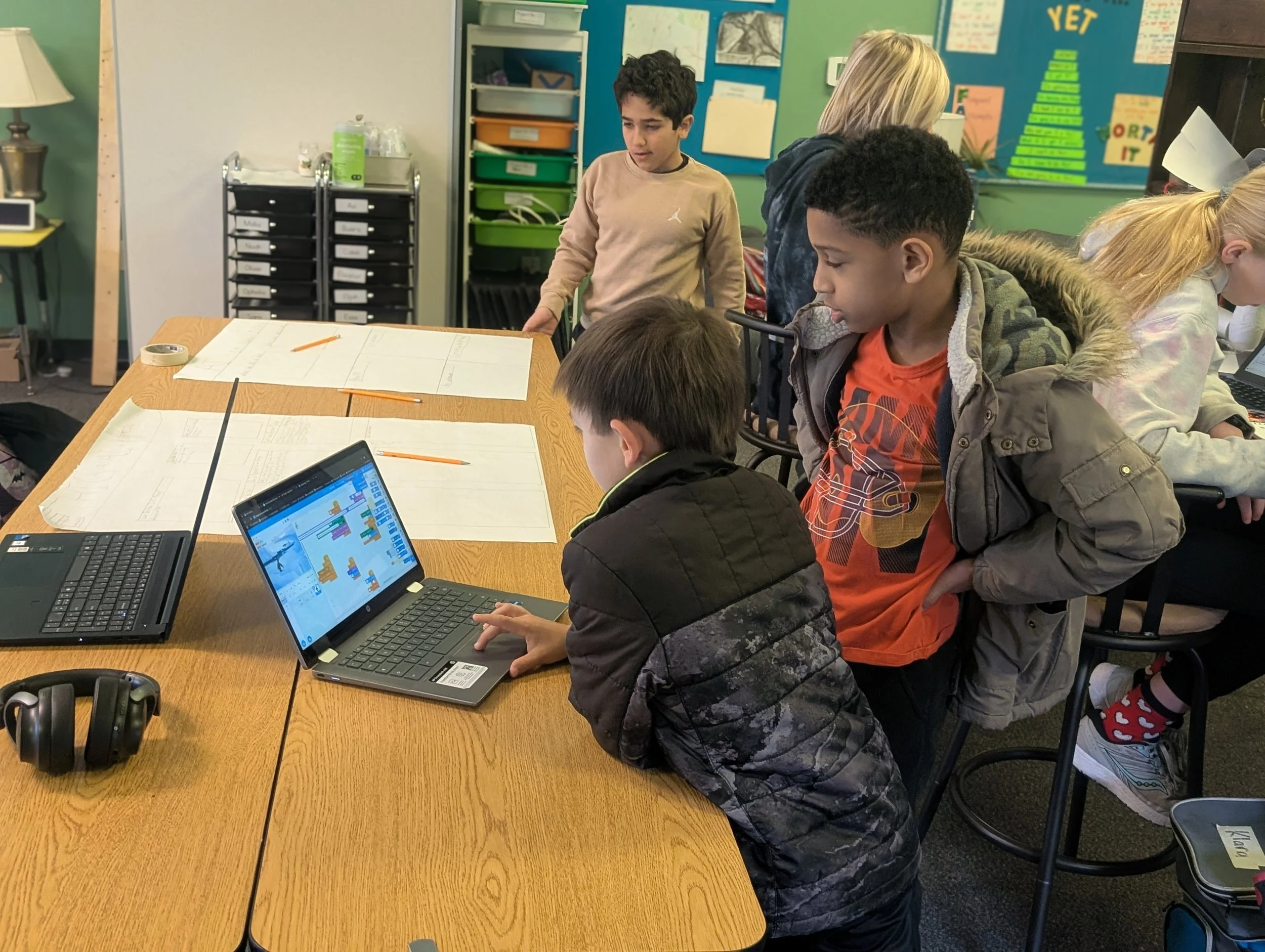

Building AI applications

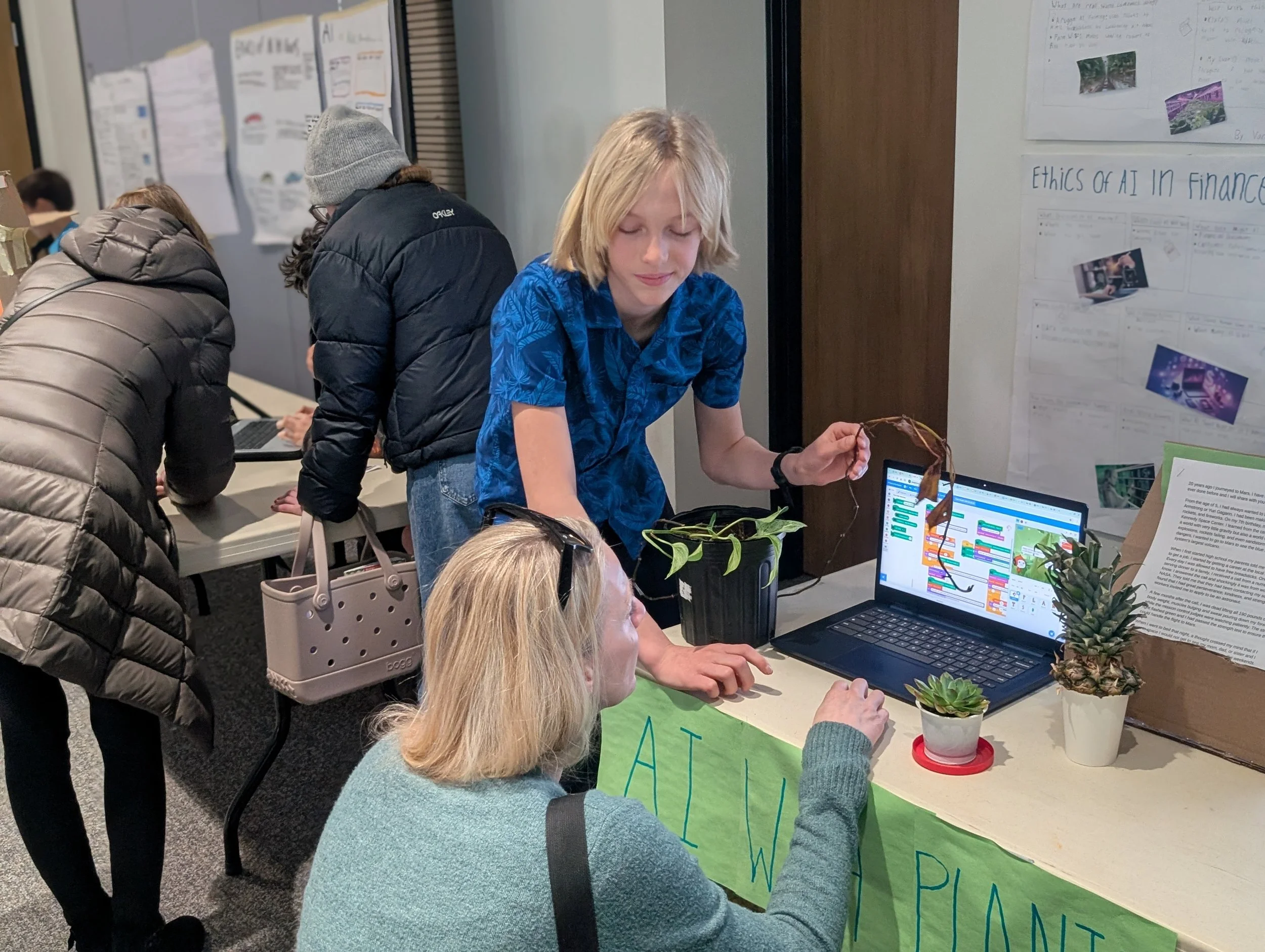

After weeks of research, discussion, and debate, the Eagles built. Every learner trained a machine learning model around a meaningful problem, then connected that model to code to build a functioning AI application. Their apps identified healthy plants, recognized sign language, and powered interactive games with real-time predictions.

Along the way, each Eagle had to:

- Define what the model detects or classifies

- Collect and label training data

- Plan for variations (lighting, angles, different users, background noise)

- Test edge cases and failure modes

- Explain the difference between “the model” and “the code” that makes it an app
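The difference between "the model" and "the code" can be sketched in a few lines of Python. In this hypothetical plant checker, `model_scores` stands in for a trained model. Its outputs are hard-coded so the example runs on its own. `plant_checker_app` is the surrounding code that decides what to do with a prediction. The 0.8 confidence threshold is an assumption chosen for illustration. It shows one simple way to keep a human in the loop when the model is unsure.

```python
import math

# Stand-in for a trained model (hypothetical). A real model would compute these
# raw scores from the image; here they are hard-coded so the sketch runs alone.
def model_scores(image_name: str) -> dict:
    fake_outputs = {
        "leaf_green.jpg": {"healthy": 3.0, "diseased": 0.5},
        "leaf_blurry.jpg": {"healthy": 1.1, "diseased": 1.0},
    }
    return fake_outputs[image_name]

def softmax(scores: dict) -> dict:
    """Turn raw scores into probabilities that sum to 1."""
    exps = {label: math.exp(s) for label, s in scores.items()}
    total = sum(exps.values())
    return {label: e / total for label, e in exps.items()}

# The "code" that makes it an app: decide what to do with the model's output.
def plant_checker_app(image_name: str, threshold: float = 0.8) -> str:
    probs = softmax(model_scores(image_name))
    label, confidence = max(probs.items(), key=lambda kv: kv[1])
    if confidence < threshold:
        # Low confidence: hand the decision back to a person.
        return "Not sure: ask a human to check this plant."
    return f"{label} ({confidence:.0%} confident)"

print(plant_checker_app("leaf_green.jpg"))   # healthy (92% confident)
print(plant_checker_app("leaf_blurry.jpg"))  # Not sure: ask a human to check this plant.
```

"92% confident" only describes how strongly the scores favor one label. It does not tell you whether that label is right, so a confidently wrong model would pass the same threshold.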

They learned quickly that models can be fragile. Lighting changes broke image classifiers. Noise confused audio models. Subtle changes in posture disrupted recognition. Models that worked perfectly with one learner failed when tested by another. They experienced “garbage in, garbage out” firsthand — and learned that data quality often matters more than the algorithm.
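The lighting problem can be shown with a toy example. This is a hypothetical sketch, not any Eagle's actual project. Each "photo" is reduced to two invented numbers: average green intensity and average brown intensity. A one-nearest-neighbor classifier labels a leaf by its closest training example. When it is trained only on bright photos, it mistakes a dimly lit healthy leaf for a diseased one. Adding darker copies of the same training photos fixes the mistake, and the algorithm stays exactly the same.

```python
import math

# Hypothetical features for a leaf photo: (average green, average brown), 0-255.
# All training photos were taken in a bright room (invented numbers).
BRIGHT_TRAINING = [
    ((200, 40), "healthy"),
    ((190, 50), "healthy"),
    ((110, 130), "diseased"),
    ((100, 140), "diseased"),
]

def dim(features, factor):
    """Simulate a darker photo by scaling every intensity down."""
    return tuple(value * factor for value in features)

def classify(training, features):
    """1-nearest-neighbor: copy the label of the closest training example."""
    _, label = min(training, key=lambda ex: math.dist(ex[0], features))
    return label

# A healthy leaf photographed in a dim hallway.
dim_healthy_leaf = dim((200, 40), 0.3)
print(classify(BRIGHT_TRAINING, dim_healthy_leaf))  # diseased: low green reads as disease

# Same algorithm, better data: add darker copies of every training photo.
VARIED_TRAINING = BRIGHT_TRAINING + [
    (dim(features, 0.5), label) for features, label in BRIGHT_TRAINING
]
print(classify(VARIED_TRAINING, dim_healthy_leaf))  # healthy
```

Adding altered copies of existing examples is a simplified form of data augmentation. Collecting real photos in varied lighting is often better still.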

Failure was the curriculum

This quest was intentionally challenging. Models failed. Data sets were incomplete. Systems behaved unpredictably. Peers “broke” each other’s apps during testing. And instead of rescuing them, we leaned into the struggle. In the real world, AI systems fail — responsible builders anticipate that. Our Eagles learned to do the same.

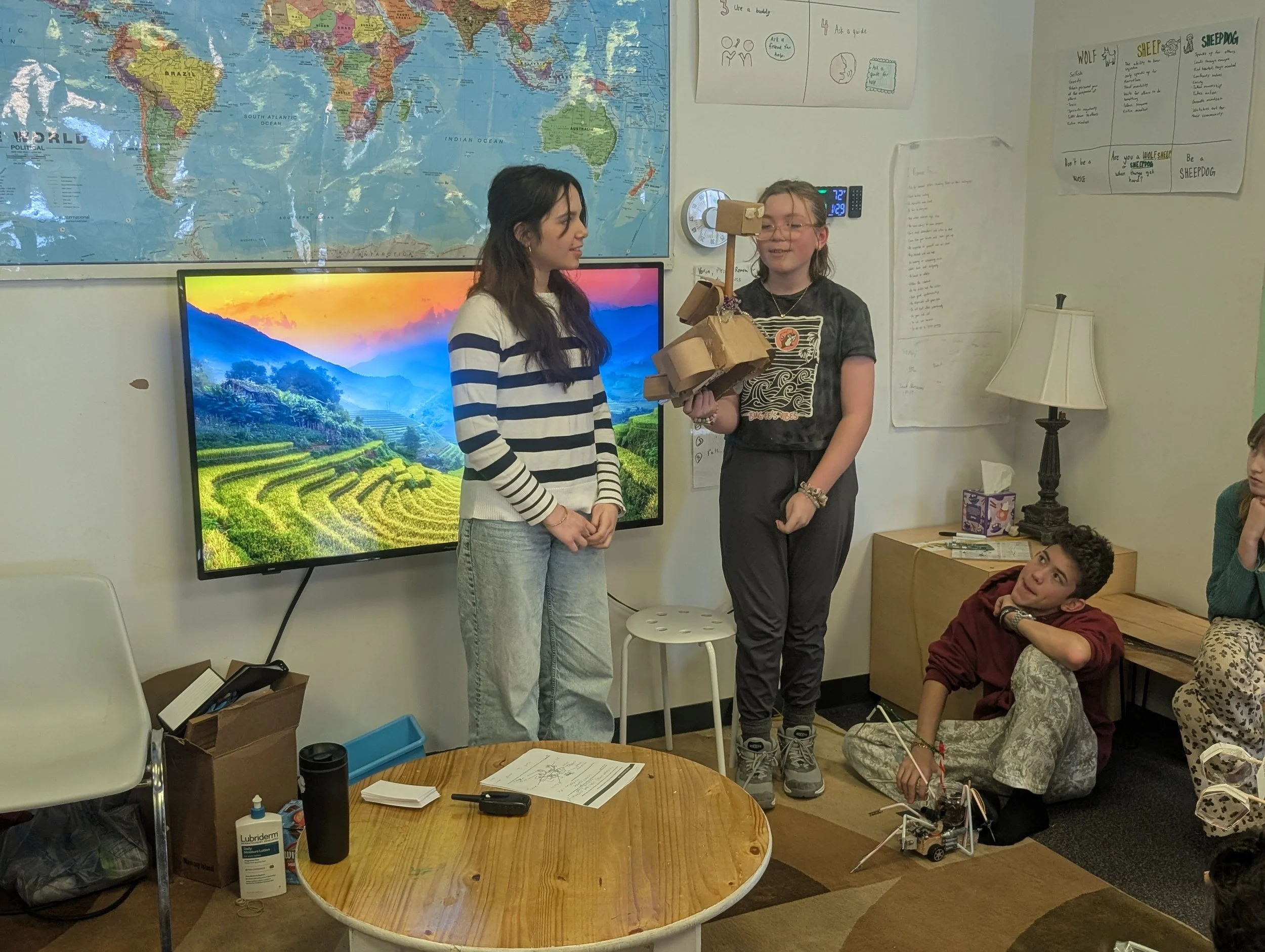

A public defense of their thinking

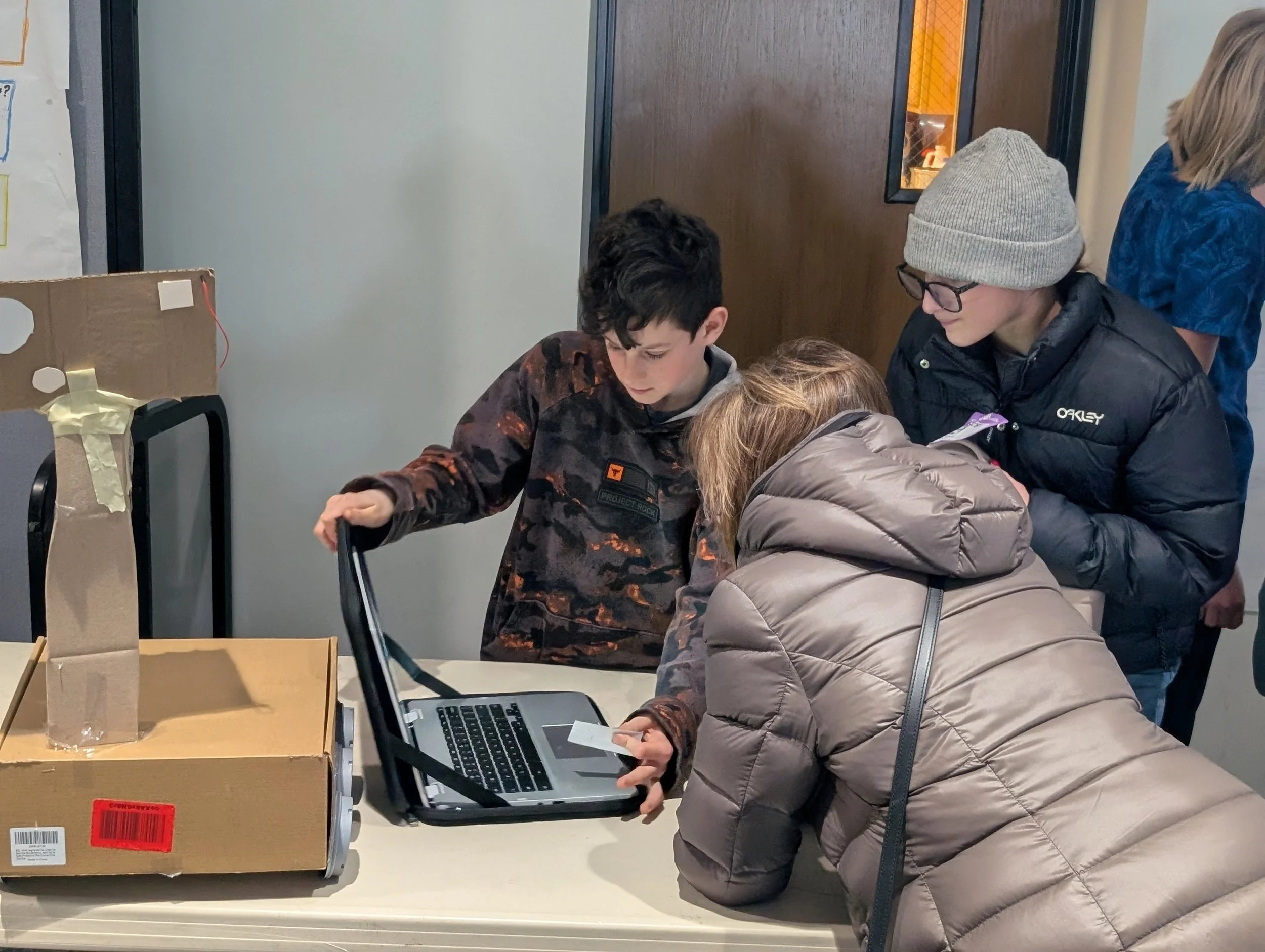

The quest culminated in an Interactive AI Playground and AI Gallery. Visitors didn’t just look at posters — they interacted with the Eagles’ apps, tested their models, and asked hard questions. Then each Eagle stepped on stage for a live Model Defense, explaining what the model does, how the app works, where it fails, and where humans must remain in control.

What they truly gained

Yes, the Eagles learned how machine learning works at a foundational level. Yes, they built AI applications. But the deeper learning went further. They learned that “smart” can be simulated without understanding, that confidence can mislead, that data choices shape outcomes, and that responsibility cannot be outsourced to machines.

They practiced explaining complex ideas clearly. They experienced agency, not by consuming technology but by building and defending it. They learned how to keep learning in the face of uncertainty. And in a world increasingly influenced by AI, that may be the most important skill of all.

Want to see learning like this in action?

Acton Academy Columbus is a learner-driven microschool where children grow through meaningful challenges, real work, and strong character culture.